Game Guides

Dragon's Dogma 2 System Requirements: Prepare Your Rig For Adventure

The first Dragon's Dogma game was a big action game with role-playing…

Okami HD PC Requirements: How To Run The Beautiful Adventure Game On Your Computer?

Okami HD is a remastered version of the critically acclaimed adventure game…

LEGO DC Super-Villains Cheat Codes: Get Unlimited Studs, Red Bricks & More

The LEGO games show no signs of slowing down soon; in fact,…

Douchebag Workout 2 Cheat Codes: Secrets To Maxing Out Your Stats

Douchebag Workout 2 is one of the Flash games that gained popularity…

News

Infection Free Zone’s Early Access Needs a Patch, Not a Pandemic

Infection Free Zone, now available on Steam Early Access, starts with a simple idea: Zombies have taken over the world,…

No Cuts Here! Stellar Blade Slashes Through Censorship in All Regions

Developer Shift Up has announced that Stellar Blade will have an "uncensored" release worldwide, including in Japan. This means that…

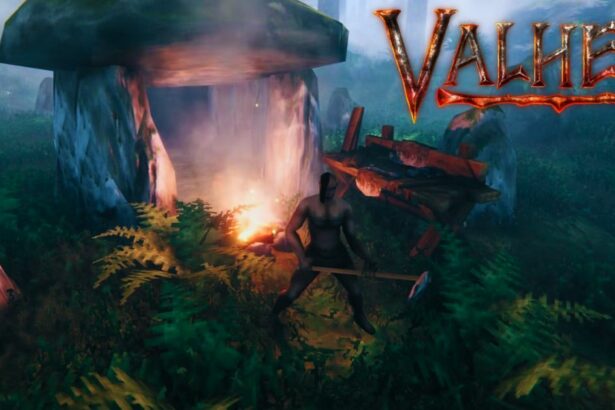

Valheim Gets an RPG Makeover: Players Dedicate 1,000 Hours to Epic Creation

Two Valheim enthusiasts, Ninebyte and Dhakhar, dedicated over a thousand hours across three months to build an entire RPG game…

Million Dollar Layoffs? CI Games CEO Hails Revenue Boom After Trimming Workforce

CI Games, the developer of Lords of the Fallen, has had its "best year" ever in terms of revenue, making…